Is Open Source AI exempt from transparency requirements?

In principle, the obligations of the AI Act do not apply to AI models released under free and open source licences. But what exactly counts as open source, and which exceptions apply? In this blog, we walk you through it.

Definition

The AI Act does not give a single, fixed definition of "open source", but the text of the Regulation offers some clues:

- General purpose AI models released under a free and open source licence are deemed to ensure high levels of transparency and openness when they disclose their parameters, including the weights, information on the model architecture and information on model usage.

- A licence is deemed free and open source when it allows users to run, copy, distribute, study, modify and improve the software and data, including models, provided that the original supplier of the model is credited and identical or comparable distribution terms are respected.

Exceptions

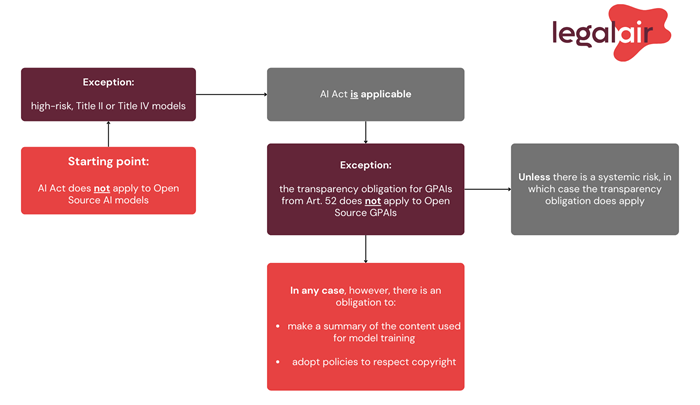

As mentioned, the obligations of the AI Act in principle do not apply to AI models released under free and open source licences ('Open Source AI models'). This changes if an Open Source AI model is placed on the market or put into service as a high-risk AI system, or if it is covered by Title II or IV. These cover prohibited AI practices, GPAI models and 'certain AI systems' (such as AI systems that generate or manipulate image, audio or video content to create a deep fake, emotion recognition systems and biometric categorisation systems).

To complicate matters a little, the exception for GPAI models (which makes the AI Act applicable to Open Source AI models after all) is itself subject to a further exception, set out in recital 60f of the AI Act:

General purpose AI models released under a free and open source licence (hereinafter: 'Open Source GPAI') 'whose parameters, including the weights, the information on the model architecture, and the information on model usage' are disclosed, are exempt from the transparency requirements that Article 52 of the AI Act imposes on GPAIs. So: the obligations under the AI Act do apply to Open Source GPAIs, but the transparency obligations do not.

However, this no longer holds when an Open Source GPAI poses a systemic risk. The fact that the model is transparent and released under an open source licence is then not sufficient reason to exempt it from the obligations of the AI Act.

A systemic risk is a risk specific to GPAIs that has a major impact on the internal market because of its scope, and with actual or reasonably foreseeable negative effects on public health, public safety, public security, fundamental rights or society as a whole, which may spread widely along the value chain.

It may therefore be the case that an Open Source GPAI poses a systemic risk, making the transparency obligations of Article 52 applicable after all.

Moreover, Open Source GPAIs do not necessarily reveal substantial information about the dataset used to train or tune the model. The same applies to information about how copyright compliance was ensured in the process. The transparency exception for Open Source GPAIs, therefore, does not apply with respect to the obligation to summarise the content used for model training and the obligation to put in place a policy with respect to Union copyright law.

This means that, even if there is no systemic risk, an Open Source GPAI must still draw up a summary of the content used for model training and implement a policy to respect Union copyright law.
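The layered exceptions above can be hard to keep track of. As a reading aid, here is a minimal Python sketch of the decision logic for GPAI models as this blog describes it. The class, field and obligation names are hypothetical labels chosen for illustration, and the sketch reduces legal tests (for example, whether a model poses a systemic risk) to simple yes/no flags.

```python
from dataclasses import dataclass


@dataclass
class GPAIModel:
    # Hypothetical flags for illustration; not terms defined by the AI Act.
    open_source_licence: bool   # released under a free and open source licence
    parameters_disclosed: bool  # weights, architecture and usage info disclosed
    systemic_risk: bool         # model poses a systemic risk


def gpai_obligations(model: GPAIModel) -> set[str]:
    """Return the GPAI obligations discussed in this blog that apply to `model`."""
    # Per this blog's reading, these two apply even to an Open Source GPAI
    # without systemic risk.
    obligations = {"training_content_summary", "copyright_policy"}

    # The transparency exemption (recital 60f) requires an open source licence,
    # disclosed parameters, and no systemic risk.
    exempt = (
        model.open_source_licence
        and model.parameters_disclosed
        and not model.systemic_risk
    )
    if not exempt:
        # Hypothetical label for the other Article 52 transparency obligations.
        obligations.add("article_52_transparency")
    return obligations


if __name__ == "__main__":
    open_model = GPAIModel(True, True, systemic_risk=False)
    risky_model = GPAIModel(True, True, systemic_risk=True)
    print(sorted(gpai_obligations(open_model)))
    # prints ['copyright_policy', 'training_content_summary']
    print(sorted(gpai_obligations(risky_model)))
    # prints ['article_52_transparency', 'copyright_policy', 'training_content_summary']
```

Note that the training-content summary and the copyright policy sit outside the `if` branch: in this reading they survive the exemption in every case, while the remaining transparency obligations only return when one of the exemption conditions fails.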

Put together in a schematic, it looks like this:

Furthermore, developers of Open Source tools, services, processes or AI components other than GPAI are encouraged to adopt generally accepted documentation practices, such as model cards and data sheets, to accelerate information sharing along the AI value chain and promote trustworthy AI systems.

Why are Open Source AI models exempted?

Software and data, including models, that are released under an open source licence allowing them to be shared openly, and allowing users to freely access, use, modify and distribute them or modified versions of them, can contribute to research and innovation in the market and can provide significant growth opportunities for the Union's economy. The exemption reflects a trade-off made by the European legislator to avoid a chilling effect on open source development.

An open end

While Open Source AI models are in principle exempt from the obligations of the AI Act, there are important exceptions, especially when these systems are considered high-risk or fall under specific categories with stricter transparency and compliance requirements. The exemption for Open Source AI models underlines the importance the European legislator attaches to promoting transparency, innovation and growth within the AI sector.

It is important for developers and users of Open Source AI models to be aware of the relevant legal frameworks and the value of best practices in documentation and compliance, even in the open source context. Do you have questions about the impact of the AI Act on Open Source AI systems, the specific exceptions or how to determine if your AI system is high-risk? Contact Jos van der Wijst.
