The EU AI Act Pyramid of the Risk-Based Approach

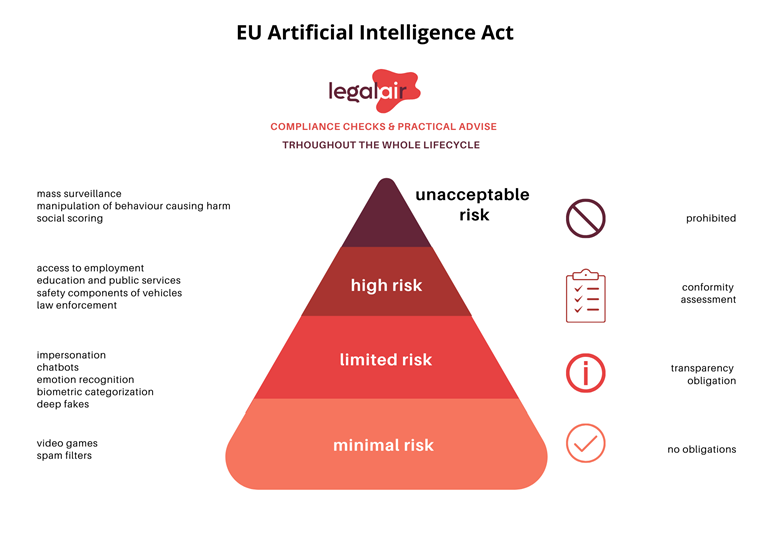

The EU AI Act has a risk-based approach that categorises AI applications into different levels of risk, determining the extent of regulatory oversight required. Here's a closer look at the key aspects of this approach:

Four-level risk framework

The AI Act introduces a four-level framework to classify AI risks:

- Unacceptable Risk: banned AI practices, like social scoring by governments;

- High Risk: AI systems used in critical infrastructure, law enforcement, etc., requiring strict compliance obligations;

- Limited Risk: AI systems with specific transparency obligations, like chatbots; and

- Minimal or No Risk: AI applications such as video games or spam filters, which are largely unregulated.
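
For readers who think in code, the four tiers listed above can be sketched as a small lookup structure. This is only an illustration under stated assumptions: the names `RiskLevel`, `CONSEQUENCE`, `EXAMPLES` and `describe` are hypothetical, the entries are limited to the examples mentioned in this article, and real classification depends on the legal text of the AI Act rather than on a keyword match.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four tiers of the pyramid, numbered from top to bottom."""
    UNACCEPTABLE = 1
    HIGH = 2
    LIMITED = 3
    MINIMAL = 4


# Regulatory consequence per tier, paraphrased from the list above.
CONSEQUENCE = {
    RiskLevel.UNACCEPTABLE: "banned",
    RiskLevel.HIGH: "strict compliance obligations",
    RiskLevel.LIMITED: "specific transparency obligations",
    RiskLevel.MINIMAL: "largely unregulated",
}

# Hypothetical lookup table holding only the examples named in this article.
# It is not a legal test: actual classification follows the Act itself.
EXAMPLES = {
    "government social scoring": RiskLevel.UNACCEPTABLE,
    "critical infrastructure": RiskLevel.HIGH,
    "law enforcement": RiskLevel.HIGH,
    "chatbot": RiskLevel.LIMITED,
    "video game": RiskLevel.MINIMAL,
    "spam filter": RiskLevel.MINIMAL,
}


def describe(use_case: str) -> str:
    """Return a one-line summary for a use case listed in EXAMPLES."""
    level = EXAMPLES[use_case.lower()]
    return f"{use_case}: {level.name.title()} risk, {CONSEQUENCE[level]}"


if __name__ == "__main__":
    for use_case in EXAMPLES:
        print(describe(use_case))
```

The point of the sketch is the shape of the framework: every use case maps to exactly one tier, and the tier, not the individual system, determines the regulatory consequence.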

The pyramid (which can be downloaded on the right) shows this categorisation at a glance: it sorts AI systems into four groups, each defined by the level of potential harm they could cause to users and society. Below, we explain the first three categories in a bit more detail.

1. Top of the pyramid: the no-go zone for AI

At the very top, we have the 'Unacceptable Risk AI Systems'. Here the EU draws a firm line: certain types of AI are categorically prohibited. This covers AI technologies that could subtly influence human cognition or that specifically target vulnerable groups. The EU also firmly rejects the use of AI for purposes such as governmental social scoring.

2. The middle tier: handle with care

Moving down a notch, we have the 'High Risk AI Systems'. These aren't outright banned, but they're watched very closely. If your AI system falls into this category, you must ensure that it is safe and effective. This particularly applies to technologies such as facial recognition and to AI applications in critical sectors, including healthcare and law enforcement. Essentially, the EU's message is clear: such developments may proceed for the sake of innovation, but they will be under strict scrutiny.

3. The lower tier: keep it transparent

Just above the bottom, we have the 'Limited Risk AI Systems'. These are more routine AI applications, but they must meet one essential requirement: transparency about how they operate. Consider AI that engages in conversation or that can create highly realistic content, such as deepfakes. The guiding principle is that individuals should always be aware when they are interacting with AI. The emphasis is on maintaining integrity and preventing any form of deception.

What this all means

The big takeaway? The EU is setting the stage for how we should think about and handle AI. It tells us to avoid certain areas, to exercise extreme caution in others, and to stay transparent even where the risk is limited.

Regulatory evolution and international perspectives

This strategy adopted by the EU is quite innovation-friendly as it goes beyond merely imposing limitations on AI technology. Instead, it aims to steer the development of AI towards a path that is both secure and ethical. It allows for rapid advancement in AI innovations while ensuring that these developments do not stray into hazardous territory. Besides that, the AI Act's future-proof approach allows for adaptation to technological changes.

Globally, there's consensus on a risk-based approach, with frameworks like the U.S. National Institute of Standards and Technology's AI Risk Management Framework potentially aligning with the EU's approach. The EU's proactive stance sets a precedent for global AI policy, offering a template that could shape future regulations worldwide. It is therefore wise to keep up to date with developments on the AI Act or to ask for advice by contacting us via info@legalair.nl.

For more information on when AI systems are considered high risk, see this blog; for the specific obligations and requirements, also take a look at this blog.
