Agreement on the AI Act

The European Parliament, the Member States and the European Commission have agreed on the text of the new AI Regulation (AI Act).

What does it say?

Prohibited uses

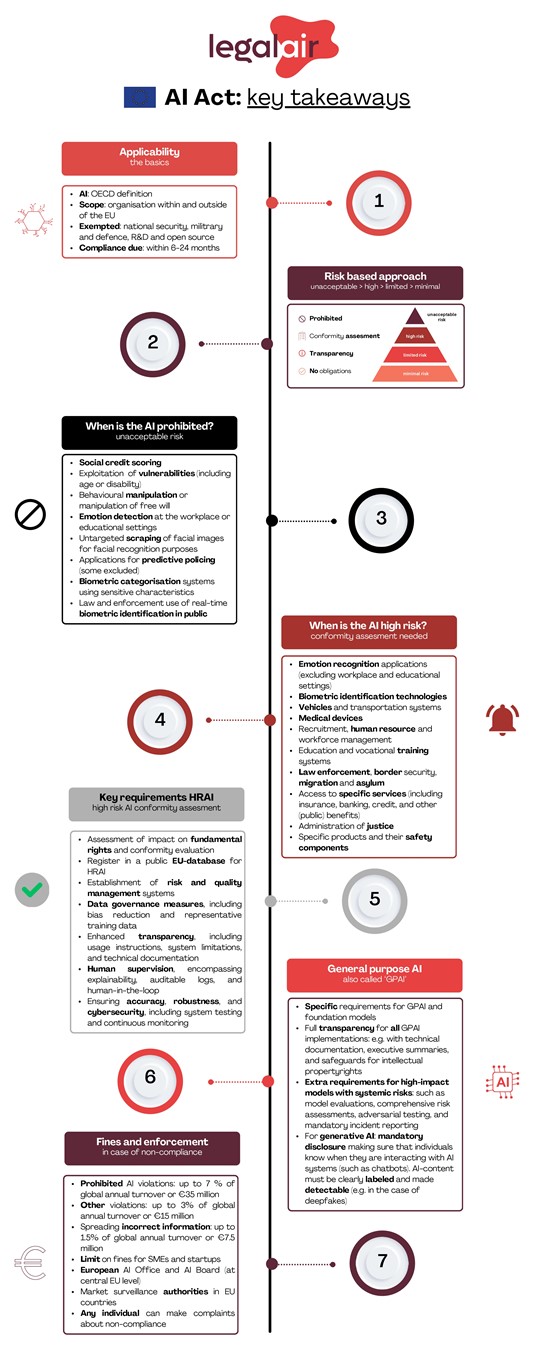

A number of AI applications have been banned, such as:

- The indiscriminate scraping of facial images from the internet or CCTV footage to create facial recognition databases. This covers practices such as those of Clearview AI.

- Emotion recognition in workplaces and educational institutions, with exceptions such as AI systems that detect whether a driver has fallen asleep.

- AI used to exploit people's vulnerabilities (because of their age, disability, social or economic situation).

- AI systems that predict, on an individual basis, the risk of someone committing crimes in the future (predictive policing software).

- Real-time facial recognition in public spaces. Under strict conditions, however, authorities will be allowed to use real-time facial recognition systems to search for individuals in public spaces. The list of crimes for which this is permitted is long and includes terrorism, human trafficking, sexual exploitation, murder, kidnapping, rape, armed robbery, participation in a criminal organisation and environmental crime.

High risk

Every AI system must be assigned to a risk category. The supplier/developer has to do this based on the intended use. AI systems classified as high-risk are listed in an annex to the AI Act. For these AI systems, a human rights impact assessment (IAMA) will be mandatory, but only for government agencies and for private entities providing services of public interest, such as hospitals, schools, banks and insurance companies.

High-risk AI systems must meet requirements such as having a risk management system, cybersecurity and human oversight.

For most AI systems, 'self-assessment' will be required to determine:

- Whether an AI system falls within the scope of the AI regulation

- In which risk category an AI system falls

- Whether an AI system meets the requirements of that risk category.
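The three self-assessment questions above can be sketched as a simple decision flow. This is a minimal illustration under stated assumptions, not a legal test: the `AISystem` fields and the `self_assess` function are hypothetical, each boolean stands in for a legal judgement that has to be made separately, and all categories below high-risk are lumped together as `OTHER` without their own requirements.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskCategory(Enum):
    OUT_OF_SCOPE = "out of scope"
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    OTHER = "other"  # simplification: lower risk categories are not distinguished


@dataclass
class AISystem:
    # All fields are hypothetical placeholders for separate legal assessments.
    name: str
    falls_under_ai_definition: bool  # question 1: does the AI Act apply at all?
    prohibited_practice: bool        # question 2: does the intended use match a banned use?
    annex_listed_use: bool           # question 2: is the intended use listed in the high-risk annex?
    unmet_requirements: list[str] = field(default_factory=list)  # question 3 input


def self_assess(system: AISystem) -> tuple[RiskCategory, list[str]]:
    """Return the risk category and the open issues for that category."""
    # Question 1: does the system fall within the scope of the AI Act?
    if not system.falls_under_ai_definition:
        return RiskCategory.OUT_OF_SCOPE, []
    # Question 2: which risk category applies, based on the intended use?
    if system.prohibited_practice:
        # A prohibited system cannot be made compliant; it has to be phased out.
        return RiskCategory.PROHIBITED, ["phase out the system"]
    if system.annex_listed_use:
        # Question 3: which high-risk requirements are not yet met?
        return RiskCategory.HIGH_RISK, list(system.unmet_requirements)
    return RiskCategory.OTHER, []


example = AISystem(
    name="example system",
    falls_under_ai_definition=True,
    prohibited_practice=False,
    annex_listed_use=True,
    unmet_requirements=["human oversight"],
)
print(self_assess(example))
```

Prohibited use is checked before the high-risk annex on purpose: meeting requirements cannot make a banned use compliant.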

In a consortium led by LegalAIR, we are working on an AI Compliance Check that makes 'vague' standards testable and measurable. To do so, we follow the work of the standardisation bodies developing these standards.

General purpose AI systems

General purpose AI (GPAI) systems, such as ChatGPT, will have to be transparent about how they comply with the AI Act. This includes providing technical documentation, respecting copyright and making a detailed statement of all the material used to train the model. This applies not only to the provider of the GPAI system, but also to parties who contributed to the development process at some point: in other words, the whole chain.

AI regulatory sandbox

SMEs will be supported in developing AI systems that comply with the AI Act. To this end, governments must set up AI regulatory sandboxes: closed environments in which (certain aspects of) an AI system can be tested under the supervision of a regulator. Norway and the UK already have this tool. Another form is real-world testing. How this will work has yet to be fleshed out in concrete terms: which AI systems qualify, what information must be shared with the regulator, what information will be made public later, and whether the regulator is willing to think constructively about making the AI system compliant.

Fines

Violating the AI Act carries high fines: up to EUR 35 million or 7% of global turnover.
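The fine ceiling is simple arithmetic. The sketch below assumes that the higher of the two amounts applies (the rule for undertakings in the most serious cases); the text above does not state this, and lower caps that may apply to SMEs or to other types of infringement are not modelled. The function name `max_fine_eur` is hypothetical.

```python
def max_fine_eur(worldwide_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> int:
    """Upper limit of a fine, in whole euros.

    Assumption: the higher of the fixed amount and the turnover-based
    amount applies. SME-specific caps are not modelled.
    """
    if worldwide_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding; fractions of a euro are dropped.
    turnover_based_cap = worldwide_turnover_eur * turnover_percent // 100
    return max(fixed_cap_eur, turnover_based_cap)


# EUR 1 billion turnover: 7% is EUR 70 million, which exceeds EUR 35 million.
print(max_fine_eur(1_000_000_000))  # 70000000
# EUR 100 million turnover: 7% is EUR 7 million, so the fixed EUR 35 million applies.
print(max_fine_eur(100_000_000))  # 35000000
```

Under this assumption, the percentage-based amount only becomes the binding ceiling above a global turnover of EUR 500 million.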

When will the AI Act come into force?

The final text must now be translated into all EU languages and adopted by the European Parliament. The AI Act will then apply two years after the date of publication, although some obligations will already apply a year earlier, such as the obligations for high-risk AI systems, powerful AI models, the bodies conducting conformity assessments and the governance chapter.

What does this mean in the meantime?

It would be unwise to wait two years before starting to work on compliance.

What should developers of AI systems do?

- Assess, based on the intended use, which risk category their AI system falls into

- Assess whether the AI system meets the requirements of that risk category

- Where it is debatable whether an AI system is prohibited or high-risk, have this determined by a third party (AI risk assessment)

- If it is a high-risk AI system, prepare to bring it into compliance with the requirements of the AI Act

- If it is unclear how the AI Act should be interpreted for your AI system, or any aspect of it, consider applying for an AI regulatory sandbox or for real-world testing.

What should buyers/users of AI systems do?

- Take stock of which AI systems your organisation uses. Ask software vendors whether AI has been incorporated into the software.

- Then assess whether any of these AI systems fall into the prohibited or high-risk category. If necessary, ask your software supplier for statements/guarantees that no prohibited and/or high-risk AI systems are deployed.

- If you are using a prohibited AI system, phase it out: look for alternatives and end its use.

- If you are using a high-risk AI system, assess whether it meets the requirements. Where it does not meet a requirement, initiate a process to bring it into compliance. If possible, place this obligation on the supplier of the AI system.

- Adjust your procurement terms and conditions. Make sure hardware/software suppliers are transparent about the AI systems they intend to supply and the risk category each system falls into. Also ensure that the AI system remains compliant: the supplier should monitor this throughout the use of the AI system (post-market surveillance) and adjust the system if necessary.

How can we help?

- Explanation

The final text of the AI Act will be available soon. We can help explain it. What is meant by 'human oversight'? What does or does not fall under the definition of artificial intelligence? What does a self-assessment look like?

- AI risk/impact assessment

Several assessments now exist for evaluating the impact of an AI system, and a risk/impact assessment can differ per use case and per sector. For instance, an assessment for an AI application in the health sector will look different from one for an AI application in the transport sector. We can help find a suitable assessment and implement it. We do this through the LegalAIR consortium, with partners who are experts in areas such as data science, cybersecurity and data ethics.

- Human Rights and Algorithm Impact Assessment (IAMA)

We are experienced in conducting IAMAs. An organisation can conduct an IAMA itself. However, experience shows that it is more practical and efficient to conduct an IAMA with an external facilitator.

- AI policy

With the advent of AI tools like ChatGPT and Midjourney, AI has become more accessible and easier to use. But who decides which AI tools may be used? Does the organisation have internal ground rules for using such AI tools? Does procurement ask for information/guarantees on how AI tools work, how they were developed and whether they comply? These issues are addressed in an 'AI policy': a document that we customise for each organisation so that AI can be used responsibly within it.

- AI audit

Just as an organisation can have its operations assessed for compliance with certain ISO standards, it can also have its AI system assessed for compliance with legal requirements. Laws and regulations already apply to AI systems today: think of privacy (GDPR), human rights (the Constitution and the European Charter), competition and consumer regulations. The AI Act now adds to these. Mapping the risks associated with a company therefore also includes investigating the risks of using certain AI systems, for example as part of due diligence in a merger or acquisition.

We prepare organisations for an AI audit, for example an audit against the audit rules of the non-profit organisation ForHumanity. Jos van der Wijst is a ForHumanity (FH) auditor.

For more information: contact Jos van der Wijst (wijst@bg.legal).